The last report covered the map making and the preparation for the following week's activity. This report covers the navigation activity itself, which took place at the Priory last Monday, March 4th. As said before, this is one piece of a bigger exercise that consists of analyzing different ways to navigate. This week, the method of navigation was a compass and a reference map; navigation with a GPS unit will be covered in the following weeks. The map used for this activity is the one the group produced in the last exercise, and, along with the compass, the pace count will also be used.

Methodology

The activity consisted of navigating over different levels of elevation, inside the woods, while a snowstorm was forming. Thus, appropriate preparation was necessary, and the first step was to dress properly for the field.

One important feature that was

not included in the maps yet was the course points where each group would need

to go to. The feature class for these points was not available on purpose, so

the class could practice the technique of plotting points in a map. Then, a

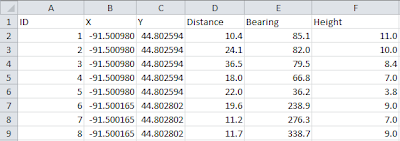

table with different UTM coordinates for each

point was given to the students, who started to plot the points in the map (Figure 1).

For that, the closest coordinate needs to be found in the grid. Then,

considering the distance between grid labels, the point should be apart from

the label at the necessary amount, which is an approximation.
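The proportional offset from a grid label can be written out as a small calculation. This is a minimal sketch, assuming the 20 m grid interval the group chose and a hypothetical printed spacing of 10 mm between grid lines; the example coordinates are made up for illustration and are not one of the actual course points.

```python
import math

def grid_offset(coord_m: float, interval_m: float, spacing_mm: float):
    """Return the grid label just below a UTM coordinate and how far past it
    (in mm on the printed map) the point should be plotted.

    interval_m: ground distance between grid lines (20 m in this exercise).
    spacing_mm: distance between those grid lines on paper (hypothetical here).
    """
    label = math.floor(coord_m / interval_m) * interval_m
    offset_mm = (coord_m - label) / interval_m * spacing_mm
    return label, offset_mm

# Hypothetical course point, plotted on each axis separately
for name, value in (("easting", 618437), ("northing", 4962355)):
    label, offset = grid_offset(value, 20, 10)
    print(f"{name}: {offset:.1f} mm past the {label} grid line")
```

Doing the two axes separately mirrors the manual procedure: find the nearest grid line, then measure the remaining fraction of the interval.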

After the points were correctly plotted, a line was drawn between each pair of consecutive points to represent the path the group should follow. To keep track of this path, a known direction is necessary, so the compass was used to measure the azimuth from each point to the next. The first step to obtain the azimuth is to correct the compass for magnetic declination; because Eau Claire has a declination close to zero, this was not necessary here. Then, the direction-of-travel arrow on the compass is placed parallel to the path. Holding the compass firmly to avoid any movement, the housing is turned until it is parallel with map north (Figure 2). This is not perfectly exact, since the alignment can only be judged by eye; one tactic is to compare the lines inside the compass housing with the grid lines.
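Because the course points were given as UTM coordinates, the azimuth read off the map with the compass could also be cross-checked numerically. The group measured azimuths with the compass, so the sketch below is only an illustrative check, not the method used in the field. It treats grid north as true north (an approximation) and assumes the convention that easterly declination is positive; the coordinates are hypothetical.

```python
import math

def grid_azimuth(e1: float, n1: float, e2: float, n2: float) -> float:
    """Azimuth in degrees, clockwise from grid north, from point 1 to point 2.

    UTM eastings/northings are meters on a flat grid, so the bearing is
    atan2(delta_easting, delta_northing), folded into [0, 360).
    """
    return math.degrees(math.atan2(e2 - e1, n2 - n1)) % 360

def to_magnetic(true_azimuth_deg: float, declination_deg: float) -> float:
    """Convert a true azimuth to the value to set on the compass.

    Assumed convention: easterly declination is positive, so the magnetic
    azimuth is the true azimuth minus the declination.
    """
    return (true_azimuth_deg - declination_deg) % 360

# Hypothetical leg: 80 m east and 80 m north of the start
az = grid_azimuth(618420, 4962340, 618500, 4962420)
print(f"grid azimuth: {az:.1f}, compass setting: {to_magnetic(az, 0.0):.1f}")
```

With a declination near zero, as in Eau Claire, the compass setting is the same as the map azimuth.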

With these values, a table with initial point, end point, azimuth, and distance was created to keep each path organized. However, only the first three fields were completed before the exercise. Instead of an estimated distance for each path (which could be obtained by measuring each path with a ruler and converting it with the map scale), the number of steps would be written there after the group walked each path.
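The ruler-and-scale estimate mentioned above is a single multiplication, and the UTM coordinates give an independent check of the same distance. A minimal sketch; the report does not state the map scale, so the 1:5,000 scale and the measurements below are hypothetical.

```python
import math

def ground_distance_m(map_cm: float, scale_denominator: float) -> float:
    """Ground distance for a ruler measurement on a 1:scale_denominator map."""
    return map_cm * scale_denominator / 100  # centimeters on the ground -> meters

def utm_distance_m(e1: float, n1: float, e2: float, n2: float) -> float:
    """Straight-line distance between two UTM points (coordinates already in meters)."""
    return math.hypot(e2 - e1, n2 - n1)

# Hypothetical leg: 3.2 cm on a 1:5,000 map vs. the same leg from coordinates
print(ground_distance_m(3.2, 5000))
print(utm_distance_m(618420, 4962340, 618516, 4962468))
```

Either value could have filled the empty distance column before going to the field.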

In the field, to find the correct direction, the compass housing is turned to the desired azimuth, and then the user turns around until the compass needle lines up with the "north" arrow of the housing. Dividing tasks within the group helped increase efficiency. I was responsible for holding the compass and keeping the right direction by sighting a second person, Kent, who would walk as far ahead as he could and then adjust his position according to my compass view. This approach was chosen because the trees were almost indistinguishable from one another, so it would have been hard to use them as references. Once the direction was set by the two of us, the third group member, Joel, walked counting steps to keep track of the distance covered. When Joel and I reached the reference (Kent), the procedure started all over again, until approximate calculations indicated that the course point was close. Following these procedures, it should be possible to find all the necessary points.

Discussion

Some issues related with the precision of the measurements need some attention: in the plotting procedure as well as in the azimuth taking. In the first issue, as it was said, it's extremely rare that points would fall exactly in the intersection of the grid lines. Then, it's necessary to rely on approximations. At this moment, the interval of grid lines shows its importance. Of course that a low interval can clutter the map, but a big interval also compromise the precision in this task: the closer the grid lines are, the more precision you will have. Considering that, the choice of the group of dealing with 20 meters intervals can be considered consistent.

It's possible, even with large intervals, to have a high precision plotting. After calculating the relation between the coordinate labels and the coordinate you need to find, using a ruler, you would plot in the exact place it should be. However, this procedure is time demanding and such precision was not totally necessary with a 20 meter interval.

The precision is subject to the matter also when calculating the azimuth. Since there's a ruler in the compass, it's possible to place it exactly in the parallel with the path. However, the positioning of north can only be measured by the eye. As said before, the existence of north-south lines inside the compass were useful to be compared with the grid lines, which increased the precision of the measurement. Although it's still not completely accurate, the error shouldn't be larger than 5 degrees, what doesn't compromise the navigation in small distances as the ones this exercise deals with. It can be a huge problem, though, when dealing with enormous distances, as in some centuries ago with the marine development.

There's also another problem that didn't compromise the navigation only because the distances were small. The professor advised that, although he asked for an UTM grid, the correct way to navigate with a compass is using the Geographic Coordinate System (GCS). The reason for that is that UTM doesn't have a true north, since all the lines are equally apart from each other. In the real-world scenario, the closer to the poles, the closer the lines would be from each other. That's the way GCS grid lines would be (Figure 3).

Another matter that could have been improved is the organization of the table made after the point plotting and azimuth taking. As said before, three fields were completed, but the fourth that would have the distance was used as a reference to keep notes of how many steps the group gave.

It would be interesting if instead of having the distance after walking through it, the group had the calculated distance from the line. Using the scale, it would be possible to have it in meters, and then, using the pace count of Joel, this could be converted to steps. Then, it would be easier to know how far the group were to the target point. Unfortunately, a ruler was not available at the time and the time was short to do this.

Another concern with the distance is that the pace count was obtained in a flat surface without snow. A totally different surface was faced in the field: steeps with about 30 cm of snow. The pace differs in this case, being usually smaller than in a regular surface. That means that the groups should walk more steps than the amount calculated.

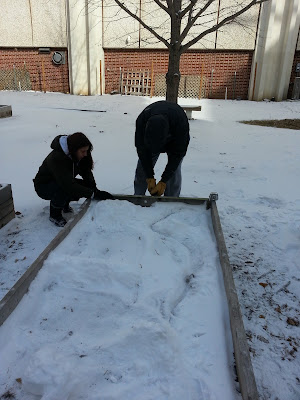

Also in the subject of the adverse conditions, it was possible to notice that more preparation need to be made to go back to the field. It was challenging to walk over the deep snow in the steeps, and since the exercise was inside the woods, the dense presence of branches was constant and frequently impacted the pathway of the group. (Figure 4) Rarely it was possible to follow a straight line, so a lot of times, it was necessary to contour any obstacle and go back to the right direction.

Following all the procedures mentioned until now, it was possible to get from the initial point to the second point, even though the group location was a little east misplaced. This fact brought the idea that some error might have been done in the direction.

In the field, the group discussed about the reasons for that, and it was thought that it was necessary to target the azimuth in longer distances. The reason for that is that if a small error is done in a first measurement when targeting it's carried on in all the next ones. Then, the less stops the group would made, the less error it would have.

With that in mind, the same procedure was made to find the second point. After walking the necessary distance, the group faced a deep ravine. Then, the map started to be analyzed to support the identification of the area. Unfortunately, a misinterpretation was made: by examining the contour lines, it looked like the point would be in the top of the ravine. (Figure 5) Then, one kept at the correct point, given by the compass, and the others would go look around to see if they would find the point. A long time passed doing that until Martin Goettl - one of the instructors - came and showed that the point was not on the top of the ravine, but on its bottom.

After analyzing better the contour lines, it was possible to notice that this was correct and could have been avoided. Two lessons were learned with that: do not doubt your compass - errors might be made, but they would not put you that far of track, as long as you keep careful with it. And also to analyze more carefully the information in the map, it doesn't help to have a very detailed map if the reader doesn't take the necessary time to interpret it.

At this point, it was already more than 5:00 PM, so Martin decided to take the group to the next point while still wasn't dark, and then go back to avoid the darkness.

Conclusion

The main learning obtained in this exercise was about the compass reliability. It was disappointing not to accomplish the goal of the project, however, it was important to make this mistake and recognize it. That way, it's guaranteed that the idea behind the error is understood and it won't be committed again. The idea of misplacement by user lack of precision is right, however, it wouldn't cause big consequences, so it's always essential to trust the compass and don't doubt that much the location you'll be placed.

The contour lines were part of the map to support the area identification, and they would be really useful if the correct analysis was made. Another lesson comes with that: only put information in a map if the reader is able to understand and interpret it correctly, otherwise it can be more confusing than helpful. In the case of this project, the users were knowledgeable about the interpretation of contour lines, and the problem was that it was necessary to be more careful in the reading. However, it's important to understand this relativity of information provided in a map, depending on the target audience. If something is supposed to be released for the public, it might not be a good idea to insert technical concepts and features.

For last, it's important to recognize the positive sides of using a compass and map: even inside the woods, the precision is not compromised. Sometimes, when inside a really dense forest, a GPS unit can have an error too high or even not acquire satellites enough to display the coordinates. However, technology keeps developing more and more to avoid these problems. The downside is that to navigate with compass and a map is a very time demanding technique. Nowadays, the world requires efficiency at a high rate, so more practical solutions replace this method.

Discussion

Some issues related to the precision of the measurements need attention, both in the plotting procedure and in taking azimuths. Regarding the first, as mentioned, points very rarely fall exactly on an intersection of grid lines, so it is necessary to rely on approximations. This is where the grid interval matters. A small interval can clutter the map, but a large interval compromises the precision of this task: the closer the grid lines are, the more precise the plotting. Considering that, the group's choice of a 20 meter interval can be considered reasonable.

Even with large intervals, it is possible to plot with high precision: after calculating the position of the coordinate relative to the grid labels, a ruler can be used to place the point exactly where it belongs. However, this procedure is time-consuming, and such precision was not really necessary with a 20 meter interval.
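The trade-off between grid interval and plotting precision can be made concrete with a rough bound. This sketch assumes the reader can reliably judge offsets by eye only to a fixed fraction of the grid interval; the value of one tenth is a hypothetical figure, not something measured in this exercise.

```python
import math

def worst_case_plot_error_m(interval_m: float, eye_fraction: float) -> float:
    """Worst-case error along one axis when plotting a point by eye.

    eye_fraction is the smallest fraction of the grid interval the reader can
    judge reliably (0.1 = tenths). Snapping to the nearest such step leaves at
    most half a step of error.
    """
    return interval_m * eye_fraction / 2

for interval in (20, 50, 100):
    per_axis = worst_case_plot_error_m(interval, 0.1)
    # Easting and northing errors combine diagonally in the worst case.
    print(f"{interval:>3} m grid: {per_axis:.1f} m per axis, "
          f"{per_axis * math.sqrt(2):.1f} m diagonal")
```

Under this assumption the error scales directly with the interval, which is why a 20 m grid kept eyeball plotting within a few meters.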

Precision is also an issue when measuring the azimuth. Since the compass has a ruler along its edge, it can be placed exactly parallel to the path. However, the alignment with north can only be judged by eye. As said before, the north-south lines inside the compass housing were useful for comparison with the grid lines, which increased the precision of the measurement. Although still not completely accurate, the error should not be larger than 5 degrees, which does not compromise navigation over short distances like the ones in this exercise. It can, however, be a huge problem over very long distances, as in maritime navigation centuries ago.
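Why a 5 degree error matters little here but a lot at sea follows from simple trigonometry: walking a distance d on a heading that is off by an angle θ leaves you d·sin(θ) to the side, roughly 8.7 m for every 100 m at 5 degrees. A minimal sketch, assuming the error stays constant over the whole distance (in practice the group re-sighted at every stop):

```python
import math

def lateral_offset_m(distance_m: float, heading_error_deg: float) -> float:
    """Sideways miss after walking distance_m with a constant heading error."""
    return distance_m * math.sin(math.radians(heading_error_deg))

for distance in (100, 500, 5_000, 100_000):
    print(f"{distance:>7} m walked -> {lateral_offset_m(distance, 5):,.1f} m off course")
```

The offset grows linearly with distance, so the same compass error that costs a few meters in the Priory would cost kilometers on a long voyage.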

Another problem did not compromise the navigation only because the distances were small. The professor advised that, although he had asked for a UTM grid, the correct way to navigate with a compass is to use the Geographic Coordinate System (GCS). The reason is that UTM grid north does not coincide with true north: the UTM grid lines are straight and equally spaced, whereas in the real world the meridians get closer to each other toward the poles. GCS grid lines follow that convergence (Figure 3).

Figure 3 - At the top, a UTM grid for the United States; at the bottom, a GCS grid. The second has curved lines representing the true north of the map, while UTM does not.

This matters because the compass needle points to magnetic north, which, once corrected for declination (negligible in Eau Claire), corresponds to true north. If the map's north is distorted, the accuracy of the measurements can be compromised. Thankfully, since the area of interest is small and the distances short, the difference between grid north and true north did not produce a noticeable error, so this specific navigation was not compromised.
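The angle between UTM grid north and true north is known as grid convergence, and a standard first-order approximation is γ ≈ (λ − λ0)·sin(φ), where λ is the longitude, λ0 the central meridian of the UTM zone, and φ the latitude. A minimal sketch; the coordinates below are an approximate location for Eau Claire, used for illustration, since the report does not give the Priory's exact position.

```python
import math

def utm_central_meridian(lon_deg: float) -> float:
    """Central meridian of the standard 6-degree UTM zone containing lon_deg.

    Valid for -180 <= lon_deg < 180; ignores the special zones near Norway/Svalbard.
    """
    zone = int((lon_deg + 180) // 6) + 1
    return -183 + 6 * zone

def grid_convergence_deg(lat_deg: float, lon_deg: float) -> float:
    """First-order approximation of the angle between UTM grid north and true north."""
    delta_lon = lon_deg - utm_central_meridian(lon_deg)
    return delta_lon * math.sin(math.radians(lat_deg))

# Approximate location of Eau Claire, Wisconsin (UTM zone 15, central meridian 93 W)
gamma = grid_convergence_deg(44.8, -91.5)
print(f"grid convergence is about {gamma:.2f} degrees")
```

Near Eau Claire this comes to roughly one degree, well inside the 5 degree eyeballing error discussed above, which is consistent with it not affecting a short course.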

Another aspect that could have been improved is the organization of the table made after plotting the points and taking the azimuths. As said before, three fields were completed, while the fourth, meant for the distance, was used to record how many steps the group took.

It would have been better if, instead of recording the distance after walking it, the group had calculated the distance from the line on the map beforehand. Using the scale, this distance could be obtained in meters and then converted to steps using Joel's pace count. That way, it would have been easier to know how far the group was from the target point. Unfortunately, no ruler was available and there was not enough time to do this.
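Converting a planned distance into an expected step count is a proportion against the pace count. A minimal sketch; Joel's actual pace count is not given in the report, so the 130 steps per 100 m and the leg lengths below are hypothetical values.

```python
def steps_for_distance(distance_m: float, steps_per_100m: float) -> int:
    """Expected number of steps to cover distance_m at a given pace count."""
    return round(distance_m * steps_per_100m / 100)

# Hypothetical values: the real pace count and leg lengths are not in the report.
JOEL_STEPS_PER_100M = 130
legs_m = {"1 -> 2": 160, "2 -> 3": 95}

for leg, distance in legs_m.items():
    steps = steps_for_distance(distance, JOEL_STEPS_PER_100M)
    print(f"leg {leg}: {distance} m, about {steps} steps")
```

Written into the fourth column before leaving, these numbers would have told the counter when to start looking for the point.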

Another concern about distance is that the pace count was obtained on a flat surface without snow, while a completely different surface was faced in the field: steep slopes with about 30 cm of snow. Steps in these conditions are usually shorter than on a regular surface, which means the group needed to walk more steps than the calculated amount.
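If the shortening of each step can be estimated, the flat-ground step count can be scaled up accordingly: a step that covers a fraction r less ground requires 1/(1 − r) times as many steps. A minimal sketch; the 20% reduction below is a hypothetical figure, since the group did not measure its pace in the snow.

```python
def snow_adjusted_steps(flat_steps: int, stride_reduction: float) -> int:
    """Expected steps when each step is shorter than on flat, dry ground.

    stride_reduction is the fraction by which each step shortens
    (0.2 means each step covers 20% less ground), so proportionally
    more steps are needed for the same distance.
    """
    if not 0 <= stride_reduction < 1:
        raise ValueError("stride_reduction must be in [0, 1)")
    return round(flat_steps / (1 - stride_reduction))

# Hypothetical: a leg planned as 208 steps on flat ground, steps 20% shorter in deep snow
print(snow_adjusted_steps(208, 0.2))
```

A simple improvement would be to measure a second pace count on a snowy slope before the exercise and use that instead of a guessed factor.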

Still on the subject of adverse conditions, it became clear that more preparation is needed before going back to the field. Walking over deep snow on the slopes was challenging, and since the exercise took place inside the woods, dense branches constantly blocked the group's path (Figure 4). It was rarely possible to follow a straight line, so the group often had to go around obstacles and then return to the right direction.

Figure 4 - Impact of natural issues on the navigation.

Following all the procedures described so far, the group got from the initial point to the second point, although its position was slightly displaced to the east. This suggested that some error had been made in the direction.

In the field, the group discussed the reasons for that and concluded that the azimuth should be sighted over longer distances. The reasoning was that a small error made when sighting one leg carries over into all the following ones, so the fewer stops the group made, the less error would accumulate.

With that in mind, the same procedure was used to find the third point. After walking the necessary distance, the group faced a deep ravine, and the map was analyzed to help identify the area. Unfortunately, a misinterpretation was made: from the contour lines, it looked like the point would be at the top of the ravine (Figure 5). One group member stayed at the location given by the compass, which turned out to be correct, while the others looked around for the point. A long time passed until Martin Goettl, one of the instructors, came and showed that the point was not at the top of the ravine but at its bottom.

Figure 5 - Location of point 3 on the contour lines.

After analyzing the contour lines more carefully, it was clear that the instructor was right and that the mistake could have been avoided. Two lessons were learned. First, do not doubt your compass: errors might be made, but they will not put you far off track as long as you use it carefully. Second, analyze the information in the map more carefully: a very detailed map does not help if the reader does not take the time to interpret it.

By then it was already past 5:00 PM, so Martin decided to take the group to the next point while it was still light and then head back before dark.

Conclusion

The main lesson of this exercise was about the reliability of the compass. It was disappointing not to accomplish the goal of the project; however, it was important to make this mistake and recognize it, because that ensures the reason behind the error is understood and it will not be repeated. Misplacement caused by the user's lack of precision does happen, but its consequences are small, so it is essential to trust the compass and not second-guess the location it leads you to.

The contour lines were part of the map to support identification of the area, and they would have been really useful if they had been analyzed correctly. Another lesson comes with that: only put information on a map if the reader is able to understand and interpret it correctly; otherwise it can be more confusing than helpful. In this project, the users knew how to interpret contour lines, and the problem was a lack of care in reading them. Still, it is important to recognize that the usefulness of the information on a map depends on the target audience. If a map is meant for the general public, it might not be a good idea to include technical concepts and features.

Finally, it is important to recognize the advantages of using a compass and map: even inside the woods, the precision is not compromised. In a really dense forest, a GPS unit can have a large error or even fail to acquire enough satellites to display coordinates, although the technology keeps improving to avoid these problems. The downside is that navigating with a compass and map is very time-consuming, and since efficiency is increasingly demanded today, more practical solutions are replacing this method.